GraphQL file uploads - evaluating the 5 most common approaches

One question that keeps coming up in GraphQL communities is how to upload files using GraphQL. This post gives you an overview of the different options available and how they compare.

Serving structured data is the core of GraphQL. Send a Query to the server, and you get a JSON Object back with exactly the structure you were asking for. What about files though? How do files fit into a Query Language for Data?

Newcomers are often confused when they're asked to upload a JPEG or PDF file using GraphQL. Out of the box, there's nothing in the GraphQL specification that mentions files. So, what are the options available, and when should we choose which one?

Overview of the 5 most common options to upload files with GraphQL APIs

Let's start with an overview of the different options:

- using GraphQL mutations with base64 encoded Blobs

- using GraphQL mutations with multipart HTTP Requests

- using a separate REST API

- using S3

- WunderGraph's Approach using the TokenHandler Pattern with S3 as the storage

Throughout the post, you'll learn that:

- base64 encoded blobs are the simplest solution, with some drawbacks

- mutations with multipart HTTP Requests are the most complex one

- using a separate REST API can be a clean solution but is unnecessary, because S3 is already the perfect API to upload files

- S3 just isn't ideal to expose directly, which we will fix using the TokenHandler Pattern with WunderGraph

How to evaluate different GraphQL file upload solutions?

Before we dive into evaluating the different solutions, let's establish some metrics for "good" solutions:

- low implementation complexity on both client and server

- bandwidth overhead should be minimal

- uploads should be fast

- the solution should work across different languages and client- and server frameworks

- portability: it should work on your laptop as well as in the cloud

- no vendor lock-in

- we should be able to easily make uploads secure

- once an upload is finished, we should be able to run server-side code

Comparison of the different options available to upload Files using GraphQL

Uploading files via GraphQL mutations with base64 encoded blobs

Let's start with the simplest solution: encoding the file as a base64 encoded blob.

StackOverflow has an example for us on how it works:
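A minimal TypeScript sketch of the idea, using the standard Blob.arrayBuffer() and btoa() APIs. The uploadFile mutation and its name/data arguments are hypothetical, standing in for whatever your schema defines:

```typescript
// Convert raw bytes to base64. btoa() expects a "binary string" in which
// every character holds exactly one byte (code point 0-255).
function bytesToBase64(bytes: Uint8Array): string {
  let binary = "";
  for (let i = 0; i < bytes.length; i++) {
    binary += String.fromCharCode(bytes[i]);
  }
  return btoa(binary);
}

// Read a File/Blob completely into memory and return it as base64.
async function fileToBase64(file: Blob): Promise<string> {
  const buffer = await file.arrayBuffer();
  return bytesToBase64(new Uint8Array(buffer));
}

// Build the JSON body of a GraphQL request. "uploadFile" is a hypothetical
// mutation that accepts the file name and its base64 contents as strings.
function buildUploadBody(fileName: string, base64Data: string): string {
  return JSON.stringify({
    query: `mutation UploadFile($name: String!, $data: String!) {
      uploadFile(name: $name, data: $data) { id }
    }`,
    variables: { name: fileName, data: base64Data },
  });
}
```

In the browser, you would POST buildUploadBody(file.name, await fileToBase64(file)) with fetch and a Content-Type of application/json, just like any other GraphQL request.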

This reads a file and returns it as a base64 encoded string. You might be asking: why base64 at all? The reason is that you can't embed raw binary data in a text format. GraphQL requests are sent as JSON, and JSON is text. If we want to send a file as part of this JSON Object, we first have to turn it into a text representation.

OK, we understand the how and the why. Let's see if this is a good solution.

The complexity of the implementation, as you can see above, is low. On the server side, you decode the JSON and then turn the base64 encoded string into its binary format again.

But there are a few problems with this solution. Base64 encoding increases the size of the file by roughly one third. So, instead of uploading 3 Megabytes, you have to upload 4. This doesn't scale well, especially not for large files.
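The "one third" comes straight from how base64 works: every 3 input bytes become 4 output characters, and a trailing partial group is padded up to 4. As a quick sketch of the arithmetic:

```typescript
// Base64 maps each group of 3 bytes to 4 ASCII characters; a trailing
// partial group is padded with '=' to a full 4 characters.
function base64EncodedLength(byteLength: number): number {
  return 4 * Math.ceil(byteLength / 3);
}

// Relative overhead of sending the encoded form instead of the raw bytes.
function base64Overhead(byteLength: number): number {
  return base64EncodedLength(byteLength) / byteLength - 1;
}

console.log(base64EncodedLength(3_000_000)); // 4000000
```

So a 3 MB file turns into 4 MB of request payload before any JSON framing is added.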

Keep in mind that base64 encoded files are part of the enclosing JSON object. With a typical JSON parser, this means you're not able to "stream" the base64 string through a decoder and into a file. Uploading one gigabyte of data using this method would occupy at least one gigabyte of memory on the server.
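On the server side, the decoding step could look like the following sketch, assuming the request carries the file name and the base64 data in variables.name and variables.data (hypothetical field names). The comments point out where the whole file ends up in memory:

```typescript
interface DecodedUpload {
  name: string;
  bytes: Uint8Array;
}

// Decode a GraphQL request body whose variables carry a base64 encoded file.
// The whole body must already be in memory as a string: JSON.parse can't
// hand us the "data" field before it has read the complete document.
function decodeBase64Upload(requestBody: string): DecodedUpload {
  const { variables } = JSON.parse(requestBody) as {
    variables: { name: string; data: string };
  };
  // atob returns a "binary string"; copy it into a byte array. At this point
  // the JSON text, the base64 string and the decoded bytes coexist in memory.
  const binary = atob(variables.data);
  const bytes = new Uint8Array(binary.length);
  for (let i = 0; i < binary.length; i++) {
    bytes[i] = binary.charCodeAt(i);
  }
  return { name: variables.name, bytes };
}
```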

If you're looking for a quick and dirty solution, it's a great choice. For production environments where a lot of API clients upload files, it's not a good match though.

Uploading files via GraphQL mutations with multipart HTTP Requests

Alright, we've learned that encoding files to ASCII is a quick solution but doesn't scale well. How about sending files in binary format? That's what HTTP Multipart Requests are meant for.

Let's have a look at a Multipart Request to understand what's going on:

An HTTP Multipart request can contain multiple "parts" separated by a boundary. Each part can have additional "Content-*" headers followed by the body.
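To make the structure concrete, here's a TypeScript sketch that assembles a multipart/form-data body by hand from text parts. The boundary XyZ123 and the field names operation and file are arbitrary; real clients pick a random boundary that can't occur in the content, and they also handle binary data:

```typescript
interface Part {
  name: string; // form field name
  filename?: string; // set for file parts
  contentType?: string; // e.g. "application/json" or "text/plain"
  body: string;
}

// Each part starts with "--<boundary>", followed by its headers, an empty
// line and the body. The final boundary is closed with a trailing "--".
function buildMultipartBody(boundary: string, parts: Part[]): string {
  const CRLF = "\r\n";
  let out = "";
  for (const part of parts) {
    out += `--${boundary}${CRLF}`;
    let disposition = `Content-Disposition: form-data; name="${part.name}"`;
    if (part.filename) disposition += `; filename="${part.filename}"`;
    out += disposition + CRLF;
    if (part.contentType) out += `Content-Type: ${part.contentType}${CRLF}`;
    out += CRLF + part.body + CRLF;
  }
  return out + `--${boundary}--${CRLF}`;
}

const body = buildMultipartBody("XyZ123", [
  { name: "operation", contentType: "application/json", body: '{"query":"{ __typename }"}' },
  { name: "file", filename: "hello.txt", contentType: "text/plain", body: "hello" },
]);
// Sent with the header: Content-Type: multipart/form-data; boundary=XyZ123
```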

How do you create a Multipart Request from JavaScript?
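A minimal sketch using the standard FormData and fetch APIs. The field names (operation, file0, file1, ...), the attachFiles mutation and the endpoint are hypothetical; the server has to agree on whatever convention you choose:

```typescript
// Build a multipart body: one part carrying the GraphQL operation as JSON,
// plus one part per file. The runtime generates the boundary.
function buildUploadForm(query: string, files: { name: string; data: Blob }[]): FormData {
  const form = new FormData();
  form.append("operation", JSON.stringify({ query }));
  files.forEach((file, i) => form.append(`file${i}`, file.data, file.name));
  return form;
}

// Don't set Content-Type manually: fetch adds
// "multipart/form-data; boundary=..." itself when the body is FormData.
async function postMultipart(endpoint: string, form: FormData): Promise<Response> {
  return fetch(endpoint, { method: "POST", body: form });
}

// A (fake) list of files.
const fakeFiles = [
  { name: "a.txt", data: new Blob(["first file"], { type: "text/plain" }) },
  { name: "b.txt", data: new Blob(["second file"], { type: "text/plain" }) },
];
const form = buildUploadForm("mutation { attachFiles { id } }", fakeFiles);
// postMultipart("https://api.example.com/graphql", form);
```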

It's simple, right? Take a (fake) list of Files, append all of them to the FormData Object, and pass it to fetch as the body. The browser takes care of the boundaries and the part headers.

On the backend, you have to read all individual parts of the body and process them. You could send a dedicated part for the GraphQL Operation and additional parts for attached files.
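On a server runtime that exposes the Fetch API's Request object (e.g. Node 18+, Deno, Bun), reading those parts could look like this sketch. It assumes the hypothetical convention that the operation is sent in a part named operation and every other file part is an upload. Note that formData() buffers the entire body, so a production implementation would rather use a streaming multipart parser:

```typescript
interface ParsedUpload {
  operation: { query: string; variables?: Record<string, unknown> };
  files: File[];
}

async function parseMultipartGraphQL(request: Request): Promise<ParsedUpload> {
  // Parses the multipart body into a FormData object (fully buffered).
  const form = await request.formData();
  const rawOperation = form.get("operation");
  if (typeof rawOperation !== "string") {
    throw new Error("missing 'operation' part");
  }
  const files: File[] = [];
  form.forEach((value, key) => {
    // Every other part that carries a file is treated as an upload.
    if (key !== "operation" && typeof value !== "string") files.push(value);
  });
  return { operation: JSON.parse(rawOperation), files };
}
```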

Let's first talk about the benefits of this solution. We're sending the files not as ASCII text but in the binary format, saving a lot of bandwidth and upload time.

But what about the complexity of the implementation? While the client implementation looks straightforward, what about the server?

Unfortunately, the GraphQL specification doesn't define how to handle Multipart Requests. Community conventions exist, but support for them varies between servers and clients. This means your solution will not be easily portable across different languages or implementations, and your client implementation depends on the exact implementation of the server.

Without Multipart, any GraphQL client can talk to any GraphQL server. All parties agree that the protocol is GraphQL, so all these implementations are compatible. If you're using a non-standard way of doing GraphQL over Multipart HTTP Requests, you're losing this flexibility.

Next, how will your GraphQL client handle the Request? Do you have to add a custom middleware to rewrite a regular HTTP Request into a Multipart one? Is it easy to accomplish this with your GraphQL client of choice?

Another problem I see is that you have to limit the number of Operations that allow Multipart Requests. Should it be allowed for Queries and Subscriptions? Probably not. Should it be allowed for all Mutations? No, just for some of them, or even just for a single Mutation, the one to upload files. To handle this, you have to add custom logic to your GraphQL Server. This logic will make portability more complex as you'd have to re-implement this logic in another language.
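A sketch of such a gate, assuming the client sends an operationName and that only a hypothetical UploadFile mutation may arrive as multipart. Trusting operationName alone isn't enough in practice; a real server would also check the parsed document, which is exactly the kind of custom logic that has to be re-implemented per language:

```typescript
// Operations (by name) that may be sent as multipart requests.
// "UploadFile" is a hypothetical mutation name.
const MULTIPART_ALLOWED = new Set<string>(["UploadFile"]);

interface IncomingOperation {
  operationName?: string;
  isMultipart: boolean;
}

// Reject multipart requests for anything not explicitly allowlisted;
// regular JSON requests pass through unchanged.
function assertTransportAllowed(op: IncomingOperation): void {
  if (!op.isMultipart) return;
  if (!op.operationName || !MULTIPART_ALLOWED.has(op.operationName)) {
    throw new Error(
      `operation ${op.operationName ?? "<anonymous>"} may not be sent as multipart`,
    );
  }
}
```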

Finally, you have the file as part of the Multipart Request. Where do you store it? That's another problem you have to solve. S3 is probably your best option if it should work both locally and in the cloud.

So, in terms of implementation complexity, this solution is quite heavy and has a lot of open questions.

Maybe it's simpler to just use a dedicated REST API?

Leaving data to GraphQL and handling file uploads with a dedicated REST API

This sounds like a solid idea. Instead of tightly coupling a custom GraphQL client to our custom GraphQL server, we could also just add a REST API to handle file uploads.

We use the same concepts as before, uploading the files using a Multipart Request.

Then, from the REST API handler, we take the files and upload them to S3 and return the response to the client.
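A framework-agnostic sketch of such an endpoint using the Fetch API's Request and Response. ObjectStore is a hypothetical interface standing in for an S3 client (with the AWS SDK for JavaScript v3 it would wrap a PutObjectCommand), and the uploads/ key prefix is arbitrary:

```typescript
// Hypothetical storage abstraction; an S3 client would implement this.
interface ObjectStore {
  put(key: string, body: Uint8Array, contentType: string): Promise<void>;
}

async function handleUpload(request: Request, store: ObjectStore): Promise<Response> {
  if (request.method !== "POST") {
    return new Response("method not allowed", { status: 405 });
  }
  const form = await request.formData();
  const keys: string[] = [];
  for (const value of form.getAll("file")) {
    if (typeof value === "string") continue; // ignore non-file fields
    const key = `uploads/${Date.now()}-${keys.length}-${value.name}`;
    await store.put(key, new Uint8Array(await value.arrayBuffer()), value.type);
    keys.push(key);
  }
  // Tell the client where its files ended up.
  return new Response(JSON.stringify({ keys }), {
    status: 201,
    headers: { "Content-Type": "application/json" },
  });
}
```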

With this solution, we're not tightly coupling a custom GraphQL client to our custom GraphQL server implementation as we leave the GraphQL protocol as is.

This solution is also fast and there's not much of a bandwidth overhead. It's also easily portable as we've not invented a custom GraphQL transport.

What are the tradeoffs though?

For one, authentication is an issue. If we deploy the upload API as a second service, we have to find a way to authenticate users across both the GraphQL and the REST API. If, instead, we add the REST API alongside the GraphQL API, just on a different endpoint, we lose some portability again, although it's not as big of an issue as adding Multipart directly to the GraphQL API.

Another issue is complexity. We're establishing a custom protocol between client and server, and we have to implement and maintain both sides. If we'd like to add another client to our implementation, using a different language, we can't just use an off-the-shelf GraphQL client and call it a day. We'd have to add this extra piece of code to the client to make it work.

In the end, we're just wrapping S3. Why not just use S3 directly?

Combining a GraphQL API with a dedicated S3 Storage API

One of the issues of our custom solution is that we're establishing a custom protocol for uploading files. How about relying on an established protocol? How about just using S3? There are plenty of clients in all languages available.

With this approach, the GraphQL API stays untouched, and we're not inventing custom file upload protocols. We can use off-the-shelf GraphQL clients as well as standard S3 clients. It's a clear separation of concerns.

Well, there's another tradeoff. How do we do authentication?

Most guides suggest adding custom backend code to pre-sign upload URLs, so that users in untrusted environments, e.g. the browser, are able to upload files without the need for a custom authentication middleware.

This adds some complexity, but it's doable. You could even add this logic as a Mutation to our GraphQL Schema. With this approach, the user can first create an attachment with metadata, which then returns a pre-signed URL to upload the file.

However, this leads to another problem. How do you know if the file was actually uploaded? You probably want to add some custom business logic to check S3 periodically if the file is successfully uploaded. If this is the case, you can update the attachment metadata in the GraphQL API.
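Sketched as two resolver-style functions, the flow looks roughly like this. Everything is hypothetical: the Presigner interface stands in for a real pre-signing call (such as getSignedUrl from the AWS SDK v3 package @aws-sdk/s3-request-presigner), objectExists for a HEAD request against the bucket, and the in-memory Map for your database:

```typescript
type AttachmentStatus = "pending" | "uploaded";

interface Attachment {
  id: string;
  key: string;
  fileName: string;
  status: AttachmentStatus;
}

interface Presigner {
  // Returns a URL that allows a PUT to `key` until it expires.
  presignPut(key: string, expiresInSeconds: number): Promise<string>;
}

const attachments = new Map<string, Attachment>();
let nextId = 1;

// Mutation: record the metadata first, then hand back a short-lived upload URL.
async function createAttachment(
  fileName: string,
  presigner: Presigner,
): Promise<{ attachment: Attachment; uploadUrl: string }> {
  const id = String(nextId++);
  const key = `attachments/${id}/${fileName}`;
  const attachment: Attachment = { id, key, fileName, status: "pending" };
  attachments.set(id, attachment);
  const uploadUrl = await presigner.presignPut(key, 300);
  return { attachment, uploadUrl };
}

// Periodic job: the server never sees the upload itself, so it has to ask
// the storage whether each pending object has arrived.
async function reconcilePending(objectExists: (key: string) => Promise<boolean>): Promise<number> {
  let updated = 0;
  for (const attachment of Array.from(attachments.values())) {
    if (attachment.status === "pending" && (await objectExists(attachment.key))) {
      attachment.status = "uploaded";
      updated++;
    }
  }
  return updated;
}
```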

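Another issue with pre-signed S3 URLs is that, by default, they don't limit the upload file size. Attackers could easily spam you with big files and exhaust your storage limits. S3's pre-signed POST policies can restrict the size with a content-length-range condition, but that's one more piece of custom code to get right.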

Additionally, do you really want your API clients to directly talk to an API of the storage provider? From a security point of view, wouldn't it make more sense to not have them interact directly?

To sum it up, a dedicated S3 API comes with a lot of advantages over the previously discussed solutions, but it's still not the perfect solution. We can make it work, but it needs custom solutions to make it secure, to verify that files were actually uploaded, and to prevent large uploads.

Securely uploading files alongside GraphQL APIs using the TokenHandler Pattern

Looking at all the options we've discussed so far, we're able to make a wish list to guide us to the ultimate solution.

Base64 encoding files is out: its simplicity doesn't justify the increase in upload bandwidth. We definitely want to use Multipart file uploads. However, we don't want to customize our GraphQL API; that's an absolute No. The custom REST API sounds great, but it also adds a lot of complexity. That said, the idea of separating file uploads from the data layer really makes sense. Finally, using S3 as the storage is great, but we don't want to expose it directly to our users. Another important aspect is that we don't want to invent custom protocols and implement custom API clients just to be able to upload files alongside standard GraphQL clients.

Taking all this into consideration, here's our final solution!

The WunderGraph Way of solving problems like this is to abstract away the complexity from the developer and to rely on open standards. We're using OpenID Connect as the standard for authentication and S3 as the standard protocol for uploading files. In addition, by using the TokenHandler Pattern, we're abstracting away the complexity of security into the server-side component, the WunderNode. Finally, we're generating a typesafe client to not just handle authentication and data access but also file uploads. All this results in the perfect abstraction that balances between developer experience and flexibility, without locking our users into specific implementations.

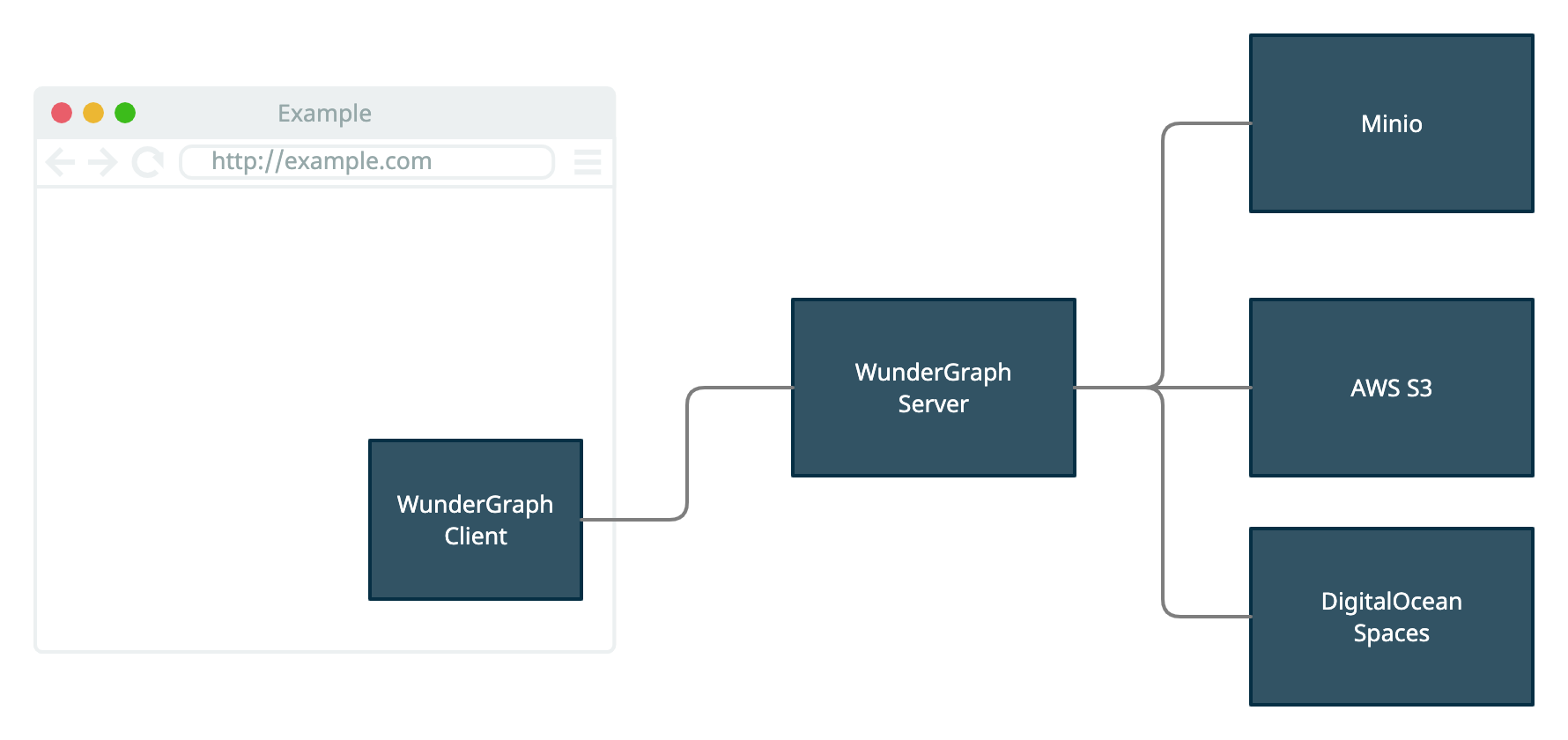

Let's look at an architecture diagram to get an overview:

The client on the left is generated. It lets you upload files without knowing the details of how uploads work, and it handles authentication for you.

In the middle, we have the "TokenHandler", the WunderGraph Server, the WunderNode. It handles the server-side part of authenticating a user, uploading files, and so on. We'll talk about the TokenHandler pattern in a second.

On the right side, we've got the storage providers. These could be Minio, running in Docker on your local machine, or a cloud provider.

Let's talk about the TokenHandler Pattern!

If you want to be able to pre-sign URLs, the browser needs to send some signed information about the user's identity alongside each request. Signed means that the server is able to trust this information.

There are different ways of solving this problem. One very popular approach is to let the user log in via OpenID Connect and then use a Bearer Token. The problem with this approach is that if a token is available in the browser and accessible to JavaScript, that JavaScript code might do something bad with it. Intentionally or not (for example, through a cross-site scripting vulnerability), a token that's accessible from JavaScript is a security risk.

A better approach is to handle the token on the server instead of the client. Once the user has completed the OpenID Connect flow, the authorization code is exchanged on the back channel (server to server) without exposing it to the client. The response, containing the identity information about the user, is never exposed to the client either. Instead, it's encrypted and stored in a secure, HTTP-only cookie with strict SameSite settings, so it's only sent with first-party requests.
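These protections map to standard Set-Cookie attributes. A sketch of the header a token handler might emit, assuming the session value has already been encrypted elsewhere; the cookie name session and the 8-hour lifetime are arbitrary choices:

```typescript
// Build a Set-Cookie header value for an (already encrypted) session.
function sessionCookie(encryptedSession: string, maxAgeSeconds = 8 * 60 * 60): string {
  return [
    `session=${encodeURIComponent(encryptedSession)}`,
    "Path=/",
    `Max-Age=${maxAgeSeconds}`,
    "HttpOnly", // not readable from JavaScript via document.cookie
    "Secure", // only sent over HTTPS
    "SameSite=Strict", // not sent with cross-site requests
  ].join("; ");
}

console.log(sessionCookie("abc"));
// session=abc; Path=/; Max-Age=28800; HttpOnly; Secure; SameSite=Strict
```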

Using the TokenHandler Pattern, the browser sends information about the user alongside every request but is not able to read or modify it. The server can trust this information, and we're not leaking any information to third-party domains.

In that sense, the WunderGraph Server, also called WunderNode, is a TokenHandler. And it's a lot more than that, for example, also a file upload handler.

Let's assume an application wants to upload files. What does the implementation look like?

The client comes with an uploadFiles function. We're able to choose between all configured upload providers. In this case, S3Provider.do was chosen because we've named one of our S3 providers do.

Everything else is handled already. We can check if the user is authenticated before allowing them to upload a file, and we're able to limit the size of the files they are intending to upload. Files will automatically be uploaded to the bucket we've defined in our configuration.
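Conceptually, the checks performed before an upload is accepted boil down to something like the sketch below. This is not WunderGraph's implementation or configuration format; UploadPolicy, checkUpload and their fields are hypothetical names. Declared sizes come from the client, so the byte limit also has to be enforced while the body is being read:

```typescript
interface UploadPolicy {
  requireAuthentication: boolean;
  maxFileSizeBytes: number;
  maxFiles: number;
}

interface UploadAttempt {
  userId?: string; // set when the session cookie resolved to a user
  fileSizes: number[]; // sizes as declared by the client
}

// Returns null if the upload may proceed, otherwise the rejection reason.
function checkUpload(policy: UploadPolicy, attempt: UploadAttempt): string | null {
  if (policy.requireAuthentication && !attempt.userId) return "not authenticated";
  if (attempt.fileSizes.length > policy.maxFiles) return "too many files";
  if (attempt.fileSizes.some((size) => size > policy.maxFileSizeBytes)) return "file too large";
  return null;
}
```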

Speaking of the configuration, here's an example of how to configure S3 file uploads for a WunderGraph application:

What's left is to evaluate this solution against the criteria we've established at the beginning.

We configure the S3 storage provider and don't have to do anything on the server. The client is generated and comes with a function to easily upload files. So, complexity of the implementation is very low.

There's no bandwidth overhead as we're using Multipart. Additionally, the WunderGraph server streams all parts, meaning that we're not putting the whole file into memory. As we're not adding base64 encoding, uploads are quite fast.
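Streaming and size limits fit together nicely: a server that never holds the whole file can still abort the upload the moment it crosses the limit. A sketch of the idea using the standard Web Streams TransformStream (available in browsers, Deno and Node 18+); it illustrates the technique and is not WunderGraph's actual code:

```typescript
// Pass chunks through unchanged while counting bytes; error the stream as
// soon as the running total exceeds maxBytes. Only one chunk is held at a time.
function byteLimit(maxBytes: number): TransformStream<Uint8Array, Uint8Array> {
  let seen = 0;
  return new TransformStream<Uint8Array, Uint8Array>({
    transform(chunk, controller) {
      seen += chunk.byteLength;
      if (seen > maxBytes) {
        controller.error(new Error(`upload exceeds ${maxBytes} bytes`));
        return;
      }
      controller.enqueue(chunk);
    },
  });
}

// Drain a body through the limiter (in a real server: into storage) and
// return the number of bytes that made it through.
async function countLimitedBytes(body: ReadableStream<Uint8Array>, maxBytes: number): Promise<number> {
  const reader = body.pipeThrough(byteLimit(maxBytes)).getReader();
  let total = 0;
  for (;;) {
    const result = await reader.read();
    if (result.done) return total;
    total += result.value.byteLength;
  }
}
```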

As we're handling uploads in front of the backend, there are no changes required to it. Clients can be generated in any language and for every framework, allowing for easy portability of the solution.

Users of this solution are not locked into vendors. For authentication, you're free to choose any OpenID Connect Provider. For uploads, any S3 compatible storage provider works fine. You can use Minio on localhost using Docker, AWS S3, DigitalOcean or others.
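Because all of these speak the S3 protocol, switching providers is mostly a matter of endpoint and credentials. A hypothetical configuration shape illustrating this (not WunderGraph's configuration API; the field names, environment variable names and the nyc3 region are assumptions, and minioadmin is Minio's default development credential):

```typescript
interface S3ProviderConfig {
  name: string;
  endpoint: string; // any S3-compatible endpoint
  bucket: string;
  accessKeyId: string;
  secretAccessKey: string;
  useSSL: boolean;
}

type Env = Record<string, string | undefined>;

// Look up a required variable in an env-like record (e.g. process.env).
function required(env: Env, key: string): string {
  const value = env[key];
  if (!value) throw new Error(`missing environment variable ${key}`);
  return value;
}

function storageProviders(env: Env): S3ProviderConfig[] {
  return [
    {
      // Minio running locally in Docker (default API port 9000).
      name: "minio",
      endpoint: "http://localhost:9000",
      bucket: "uploads",
      accessKeyId: env.MINIO_ACCESS_KEY ?? "minioadmin",
      secretAccessKey: env.MINIO_SECRET_KEY ?? "minioadmin",
      useSSL: false,
    },
    {
      // DigitalOcean Spaces; the region in the endpoint is just an example.
      name: "do",
      endpoint: "https://nyc3.digitaloceanspaces.com",
      bucket: required(env, "DO_SPACES_BUCKET"),
      accessKeyId: required(env, "DO_SPACES_KEY"),
      secretAccessKey: required(env, "DO_SPACES_SECRET"),
      useSSL: true,
    },
  ];
}
```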

Uploads are as secure as they can be thanks to the TokenHandler pattern. We're not exposing any user credentials to the client. We limit the upload file size. And there's no way to leak pre-signed URLs if we don't use them.

Additionally, you're able to use WunderGraph Hooks to act once a file upload is finished. Just add your custom logic using TypeScript: call a mutation, update the database, anything is possible.

Conclusion

I hope it's clear that uploading files for web applications is not as easy as it might sound. We've put a lot of thought into architecting a proper solution. Using the TokenHandler pattern, we're able to offer a secure solution not just for handling data but also for file uploads.

Depending on your use case, the simple base64 approach might work well for you.

Adding custom Multipart protocols to your GraphQL API should really be avoided as it's adding a lot of complexity.

A custom REST API might be a good solution if you have the resources to build it.

If you're looking for a battle-tested, ready-to-use solution, give WunderGraph's approach a try.

Try out the example to see uploads in action or watch the video to follow along.